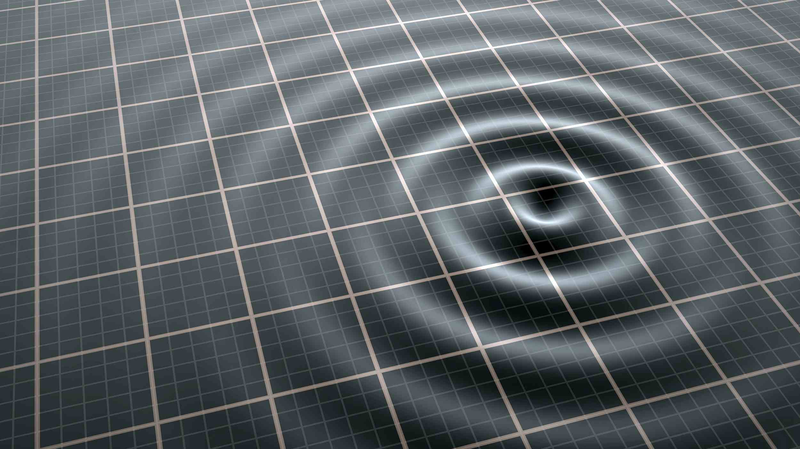

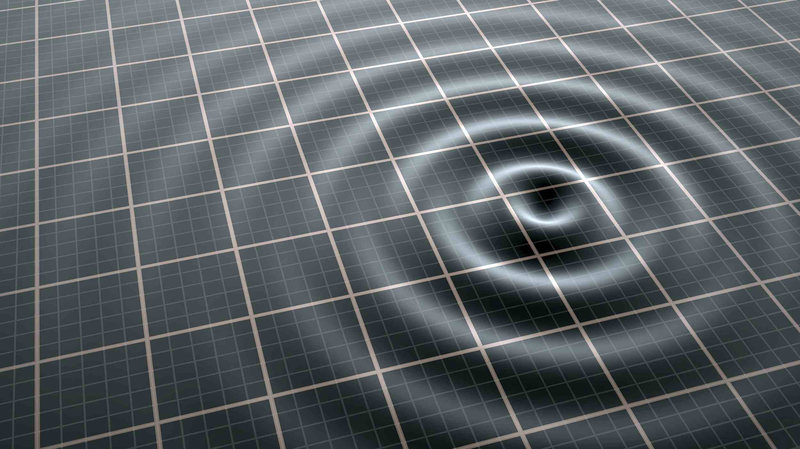

A powerful magnitude-7.2 earthquake struck off Russia’s Kamchatka Peninsula early Sunday, triggering tsunami warnings across the Pacific region. The quake, followed by a series of strong tremors including a magnitude-6.6 event, occurred just 10 kilometers below the ocean floor near Petropavlovsk-Kamchatsky 🌏💥.

The U.S. Geological Survey (USGS) issued an urgent tsunami alert, warning of "hazardous waves" within 300 km of the epicenter. While initial reports show no immediate damage, authorities are urging coastal residents to stay vigilant 🚨.

Kamchatka, known for its volcanic landscapes and extreme weather, sits on the seismically active "Ring of Fire." This latest quake adds to a string of recent seismic events in the area, a reminder of nature’s unpredictable power 🌋🔍.

Source: China Earthquake Networks Center (CENC), USGS

Reference(s):

"Tsunami alert issued after powerful earthquakes hit off Russia's coast," cgtn.com